justineanweiler.com – Computer architecture is the conceptual design and fundamental operational structure of a computer system. It refers to the layout of the system’s components and how they interact with each other to perform tasks. In essence, it is the blueprint that defines how hardware components like the CPU, memory, and input/output devices are integrated to achieve optimal performance and functionality.

Understanding computer architecture is crucial for engineers, programmers, and anyone working in the field of computing because it provides insight into how software interacts with hardware. This article provides an overview of computer architecture, its components, types, and its importance in modern computing.

Key Components of Computer Architecture

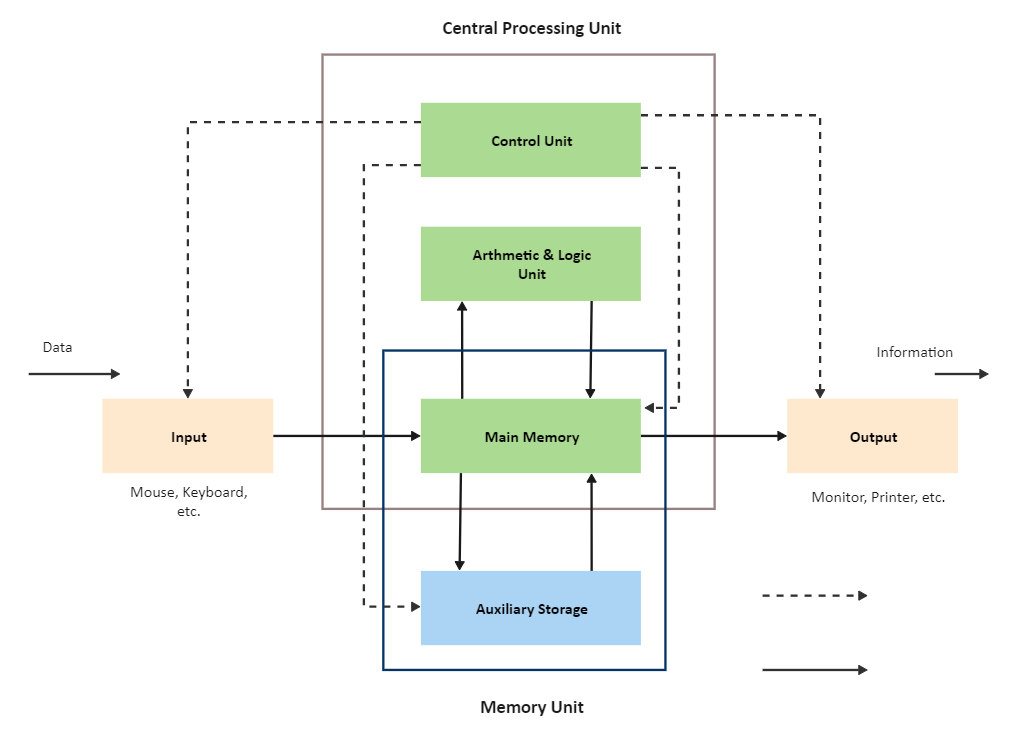

A computer system is typically made up of several key components that are designed to work together to execute instructions. These components can be grouped into the following primary categories:

- Central Processing Unit (CPU): The CPU is the “brain” of the computer and is responsible for executing instructions. It consists of several components:

- Control Unit (CU): Manages and coordinates the execution of instructions by directing the flow of data within the system.

- Arithmetic Logic Unit (ALU): Performs arithmetic and logical operations (e.g., addition, subtraction, comparison).

- Registers: Small, fast storage locations within the CPU that hold data temporarily during instruction execution.

- Memory: Memory refers to the storage areas in the computer where data is stored and retrieved during computation. Memory can be divided into:

- Primary Memory (RAM): Temporary storage that holds data and instructions that are actively being processed. It is fast but volatile, meaning data is lost when the computer is powered off.

- Secondary Memory (Hard Drive, SSD): Long-term storage used to store data and software programs. It is slower than RAM but non-volatile, meaning it retains data even when the computer is turned off.

- Cache Memory: A small, high-speed storage located inside or close to the CPU that stores frequently accessed data to reduce latency.

- Input and Output Devices:

- Input Devices: Allow users to interact with the computer (e.g., keyboard, mouse, scanner).

- Output Devices: Display the results of the computer’s operations (e.g., monitor, printer).

- Bus System: The bus is a communication system that transfers data between the CPU, memory, and input/output devices. It consists of a set of physical lines (wires or tracks on a circuit board) and protocols that define how data is transferred. The bus system typically includes:

- Data Bus: Transfers actual data.

- Address Bus: Carries memory addresses.

- Control Bus: Manages control signals between components.
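To see how these components cooperate, here is a minimal sketch of a fetch-decode-execute loop. The four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`), the single accumulator register, and the function names are all invented for illustration and do not correspond to any real processor. The Python list stands in for primary memory, the `while` loop plays the role of the control unit, and `alu_add` plays the role of the ALU.

```python
# Hypothetical toy instruction set, invented for this sketch.
LOAD, ADD, STORE, HALT = "LOAD", "ADD", "STORE", "HALT"


def alu_add(a, b):
    """Stand-in for the ALU: performs the arithmetic."""
    return a + b


def run(memory):
    """Stand-in for the control unit: fetch, decode, execute until HALT."""
    pc = 0   # program counter register: address of the next instruction
    acc = 0  # accumulator register: holds the value being worked on
    while True:
        opcode, address = memory[pc]  # fetch the instruction from memory
        pc += 1
        if opcode == LOAD:            # decode and execute
            acc = memory[address]
        elif opcode == ADD:
            acc = alu_add(acc, memory[address])
        elif opcode == STORE:
            memory[address] = acc
        elif opcode == HALT:
            return acc
        else:
            raise ValueError(f"unknown opcode: {opcode}")


# Instructions occupy addresses 0-3, data occupies addresses 4-6.
memory = [(LOAD, 4), (ADD, 5), (STORE, 6), (HALT, 0), 2, 3, 0]
result = run(memory)
print(result, memory[6])  # 5 5
```

Every `memory[...]` access in the loop corresponds to a transfer over the bus: the index is what the address bus would carry, and the value read or written is what the data bus would carry.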

Types of Computer Architecture

Computer architecture can be classified based on its design philosophy, functionality, and the technology it uses. Below are the primary types of computer architecture:

- Von Neumann Architecture (Stored-Program Architecture): The Von Neumann architecture is the most widely used design for general-purpose computers. It is based on the idea that both data and instructions are stored in the same memory. Because instruction fetches and data accesses share a single path to that memory, they cannot happen at the same time, and the CPU can be left waiting on memory. This limit is known as the Von Neumann bottleneck.

- Components:

- A single memory for both data and instructions.

- A control unit, ALU, and registers.

- A single bus for data transfer.


- Harvard Architecture: The Harvard architecture is an alternative design where the CPU uses separate memories for data and instructions. This allows simultaneous access to both data and instructions, which can improve performance over the Von Neumann architecture, particularly in specialized applications such as digital signal processing (DSP).

- Components:

- Separate memory for instructions and data.

- Independent data and instruction buses.


- RISC (Reduced Instruction Set Computing): RISC architecture is designed around a small, highly optimized set of instructions, most of which are designed to complete in a single clock cycle. Keeping each instruction simple makes instructions easier to decode and pipeline, although a program may need more of them than it would with a more complex instruction set.

- Features:

- Simple, fixed-length instructions.

- Frequent use of registers to minimize memory access.

- Relies on software (chiefly the compiler) to combine simple instructions efficiently.

- Examples: ARM, MIPS.


- CISC (Complex Instruction Set Computing): CISC architecture, in contrast to RISC, uses a larger set of instructions, many of which can perform multiple operations in a single instruction. A single CISC instruction can therefore do more work, but the instructions are more complex to decode and often take several clock cycles to execute.

- Features:

- Complex, variable-length instructions.

- Instructions can perform multiple operations.

- More emphasis on hardware-based optimization.

- Example: the x86 architecture, used in most desktop computers.


- Parallel and Distributed Architectures: As the demand for higher computing power grows, parallel and distributed architectures have gained prominence. These systems leverage multiple processors or machines working together to perform tasks in parallel, significantly improving performance for certain applications.

- Parallel Architecture: Multiple processors in a single machine working simultaneously to solve different parts of a problem.

- Distributed Architecture: Multiple machines connected over a network, where each machine works on a different part of the task.
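The difference between the Von Neumann and Harvard designs described above can be made concrete with a rough cycle count. The sketch below is a deliberately simplified model, not a description of any real machine: it assumes every memory transfer takes exactly one cycle, that a shared bus serializes instruction fetches and data accesses, and that separate buses let the fetch of the next instruction overlap with the data access of the current one. The function names and the list-of-booleans program format are hypothetical.

```python
def shared_bus_cycles(program):
    """Von Neumann-style model: one bus, so fetches and data accesses take turns.

    `program` is a list of booleans; True means the instruction also
    reads or writes data memory.
    """
    return len(program) + sum(program)


def split_bus_cycles(program):
    """Harvard-style model: instruction and data buses work in parallel.

    The data access of instruction i overlaps the fetch of instruction
    i + 1, so only a data access by the final instruction adds a cycle.
    """
    if not program:
        return 0
    return len(program) + (1 if program[-1] else 0)


# Four instructions, three of which touch data memory.
program = [True, False, True, True]
print(shared_bus_cycles(program))  # 7
print(split_bus_cycles(program))   # 5
```

Under these assumptions the gap widens as more instructions touch data memory, which is consistent with the Harvard design being favored for data-heavy workloads such as DSP.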

Performance and Optimization

The performance of a computer system is often measured by its clock speed, instruction throughput, and latency. However, optimizing computer architecture goes beyond raw performance. Factors such as power consumption, heat generation, and cost are also critical in the design of modern computer systems.
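Clock speed and instruction throughput combine in the standard textbook relation: execution time equals instruction count times average cycles per instruction (CPI), divided by clock rate. The short sketch below applies that relation to two made-up processors. The function name and all the numbers are illustrative assumptions, not measurements.

```python
def cpu_time_seconds(instruction_count, cycles_per_instruction, clock_hz):
    """Execution time = instructions x cycles per instruction / clock rate."""
    return instruction_count * cycles_per_instruction / clock_hz


instructions = 1_000_000_000  # same program on both hypothetical CPUs

# Hypothetical CPU A: faster clock, but 2 cycles per instruction on average.
time_a = cpu_time_seconds(instructions, 2.0, 2_000_000_000)

# Hypothetical CPU B: slower clock, but 1 cycle per instruction on average.
time_b = cpu_time_seconds(instructions, 1.0, 1_500_000_000)

print(f"A: {time_a:.3f} s, B: {time_b:.3f} s")  # A: 1.000 s, B: 0.667 s
```

In this example the processor with the lower clock speed finishes first, which is why clock speed alone is a poor measure of performance.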

Key techniques for optimizing computer architecture include:

- Pipelining: Breaks the instruction cycle into discrete stages (such as fetch, decode, and execute) so that several instructions can be in progress at once, each in a different stage.

- Superscalar Execution: Uses multiple execution units to execute several instructions in parallel.

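- Out-of-Order Execution: Lets the CPU execute instructions as soon as their inputs are ready rather than strictly in program order, while still producing the same results as in-order execution. This keeps execution units busy and improves CPU utilization.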
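The benefit of pipelining can be estimated with a simple cycle count. The sketch below assumes an idealized pipeline in which every stage takes one cycle and there are no stalls, hazards, or branch mispredictions, so real processors will do worse than this. The function names are hypothetical.

```python
def unpipelined_cycles(num_instructions, num_stages):
    """Each instruction passes through every stage before the next one starts."""
    return num_instructions * num_stages


def pipelined_cycles(num_instructions, num_stages):
    """Ideal pipeline: the first instruction takes num_stages cycles to
    finish, then one further instruction completes every cycle."""
    if num_instructions == 0:
        return 0
    return num_stages + (num_instructions - 1)


n, k = 100, 5
print(unpipelined_cycles(n, k))  # 500
print(pipelined_cycles(n, k))    # 104
```

For a long instruction stream the ideal speedup approaches the number of stages, here 500 / 104, or roughly 4.8 times for a five-stage pipeline.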

Conclusion

Computer architecture is a critical field that combines the theoretical foundations of computing with practical, real-world applications. Whether it’s designing a system with a simple Von Neumann architecture or building highly complex parallel systems, understanding the components and principles of computer architecture is essential for advancing computing technology. As we continue to move toward more powerful, energy-efficient, and specialized computing systems, innovations in computer architecture will play a crucial role in shaping the future of technology.