justineanweiler.com – Neural networks are a cornerstone of modern artificial intelligence (AI), powering applications ranging from image recognition and natural language processing to autonomous vehicles and medical diagnostics. Modeled loosely on the human brain, neural networks are designed to recognize patterns and make decisions based on data. In this article, we will explore the fundamentals of neural networks, their structure, types, and applications.

What Are Neural Networks?

At their core, neural networks are computational models inspired by the structure and function of biological neurons. They consist of layers of interconnected nodes, or “neurons,” which pass signals to one another in a way that is loosely analogous to neurons in the brain. These models learn from data: as they see more training examples, they adjust their internal parameters and improve at the task, which makes them valuable for problems that are hard to solve with hand-written rules.

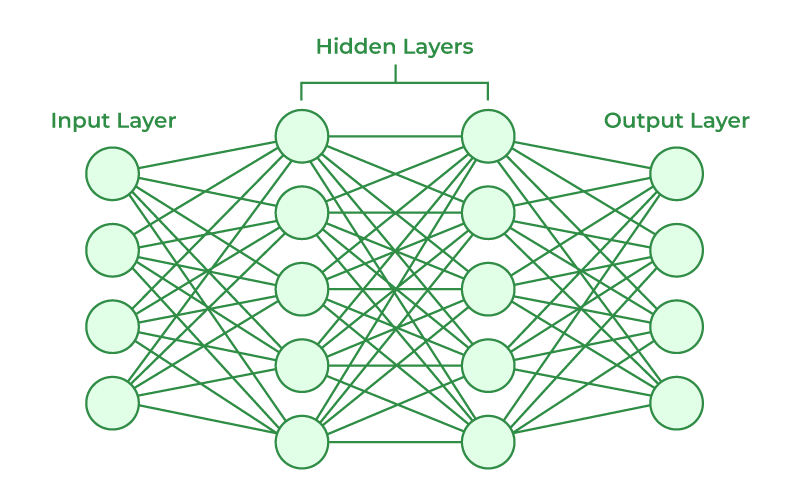

A neural network typically consists of three main types of layers:

- Input Layer: This layer receives raw data, such as images, text, or numerical values.

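- Hidden Layers: These layers perform the intermediate computations and extract features from the data. The number of hidden layers sets the depth of the network, and the number of neurons in each layer sets its width; together they determine how complex a pattern the network can represent.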

- Output Layer: This layer produces the final output, such as a classification label or a prediction.

Each connection between neurons is associated with a weight, which determines how strongly the output of one neuron influences the next. During training, the network adjusts these weights to minimize errors and improve accuracy.
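To make the layer structure concrete, here is a minimal sketch in plain Python of data passing through one hidden layer and one output layer. The weights, biases, input values, and the choice of a sigmoid activation are all hypothetical and picked by hand for illustration; a real network would learn its weights during training.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """One fully connected layer.

    Each neuron takes a weighted sum of all inputs, adds its bias,
    and applies the sigmoid activation.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(z))
    return outputs

# Hypothetical hand-picked parameters: 3 inputs -> 2 hidden neurons -> 1 output.
hidden_weights = [[0.2, -0.4, 0.1], [0.7, 0.3, -0.5]]
hidden_biases = [0.0, 0.1]
output_weights = [[1.0, -1.0]]
output_biases = [0.0]

features = [1.0, 0.5, -1.5]                                      # input layer
hidden = dense_layer(features, hidden_weights, hidden_biases)    # hidden layer
prediction = dense_layer(hidden, output_weights, output_biases)  # output layer
print(hidden, prediction)
```

Each inner list in `hidden_weights` holds the weights of one neuron, so a larger weight means the corresponding input has more influence on that neuron's output.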

How Do Neural Networks Work?

The functioning of a neural network involves two main processes: forward propagation and backpropagation.

- Forward Propagation: Data flows through the network from the input layer to the output layer. Each neuron computes a weighted sum of its inputs, adds a bias, and applies an activation function (such as sigmoid or ReLU) to produce its output, which is then passed to the next layer.

- Backpropagation: After the network generates an output, a loss function compares it with the expected output to quantify the error. Backpropagation then applies the chain rule to work backward through the layers and compute how much each weight contributed to that error. An optimization algorithm, such as gradient descent, uses these gradients to adjust the weights and reduce the error. This cycle repeats over many passes through the training data until the error stops improving or reaches an acceptable level.
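The two processes above can be sketched on the smallest network that still has a hidden layer: one input, one hidden neuron with a tanh activation, and one linear output. This is an illustrative toy, not a production training loop. The starting weights, the single training example, the squared-error loss, and the learning rate are all assumptions chosen for the example.

```python
import math

def forward(x, w1, w2):
    """Forward propagation: input -> tanh hidden neuron -> linear output."""
    h = math.tanh(w1 * x)  # hidden activation
    y = w2 * h             # network output
    return h, y

def loss(y, target):
    """Squared error for a single example."""
    return 0.5 * (y - target) ** 2

def backward(x, target, w1, w2):
    """Backpropagation: apply the chain rule from the loss back to each weight."""
    h, y = forward(x, w1, w2)
    dL_dy = y - target             # derivative of the loss w.r.t. the output
    dL_dw2 = dL_dy * h             # gradient for the output weight
    dL_dh = dL_dy * w2             # error passed back to the hidden neuron
    dL_dz = dL_dh * (1.0 - h * h)  # through tanh: d tanh(z)/dz = 1 - tanh(z)^2
    dL_dw1 = dL_dz * x             # gradient for the hidden weight
    return dL_dw1, dL_dw2

def train(x, target, w1, w2, learning_rate=0.1, steps=200):
    """Plain gradient descent: step each weight against its gradient."""
    for _ in range(steps):
        g1, g2 = backward(x, target, w1, w2)
        w1 -= learning_rate * g1
        w2 -= learning_rate * g2
    return w1, w2

# Hypothetical training example and starting weights.
x, target = 1.5, 0.8
w1, w2 = 0.5, -0.3

initial_loss = loss(forward(x, w1, w2)[1], target)
w1_trained, w2_trained = train(x, target, w1, w2)
final_loss = loss(forward(x, w1_trained, w2_trained)[1], target)
print(initial_loss, final_loss)
```

The `backward` function mirrors `forward` in reverse order, which is the core idea of backpropagation: the error at the output is passed back one layer at a time, and each weight receives the share of the gradient it is responsible for. Real frameworks automate this step for networks with many layers and millions of weights.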

Types of Neural Networks

Neural networks come in various architectures, each suited for specific tasks:

- Feedforward Neural Networks (FNN): The simplest type of neural network, where data flows in one direction from input to output.

- Convolutional Neural Networks (CNN): Specialized for processing grid-like data, such as images. They use convolutional layers to detect features like edges, shapes, and textures.

- Recurrent Neural Networks (RNN): Designed for sequential data, such as time series or text. They have recurrent connections that carry a hidden state from one step to the next, which lets them retain information about previous inputs.

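- Generative Adversarial Networks (GAN): Consist of two networks, a generator and a discriminator, that are trained against each other. The generator tries to produce realistic data, such as images or videos, while the discriminator tries to tell generated samples from real ones.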

- Transformer Networks: Commonly used in natural language processing tasks, these networks use self-attention mechanisms to process sequences of data.
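As an illustration of the self-attention mechanism mentioned for transformers, here is a simplified sketch of scaled dot-product attention in plain Python. It assumes the queries, keys, and values are the input vectors themselves; real transformer layers first pass the inputs through learned projection matrices, which are omitted here. The three two-dimensional "token" vectors are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(sequence):
    """Scaled dot-product self-attention with queries = keys = values = inputs.

    Simplification: no learned projections and a single attention head.
    """
    dim = len(sequence[0])
    outputs, all_weights = [], []
    for query in sequence:
        # Score this position against every position in the sequence.
        scores = [dot(query, key) / math.sqrt(dim) for key in sequence]
        weights = softmax(scores)
        # The output is a weighted average of all value vectors.
        mixed = [
            sum(w * value[i] for w, value in zip(weights, sequence))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical sequence of three 2-dimensional token vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how much one position attends to every position in the sequence, including itself. Because every position is compared with every other in a single step, the mechanism can relate distant elements without passing information through a recurrent loop.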

Applications of Neural Networks

Neural networks have revolutionized numerous fields, including:

- Computer Vision: Used in facial recognition, object detection, and medical imaging.

- Natural Language Processing (NLP): Powering chatbots, language translation, and sentiment analysis.

- Healthcare: Assisting in disease diagnosis, drug discovery, and personalized medicine.

- Finance: Employed in fraud detection, stock price prediction, and risk assessment.

- Autonomous Systems: Enabling self-driving cars and drones to navigate and make decisions.

Challenges and Future Directions

Despite their success, neural networks face challenges such as:

- Data Dependency: Supervised training often requires large amounts of labeled data, which can be expensive or slow to collect.

- Computational Cost: Training deep networks can be resource-intensive.

- Interpretability: Neural networks often function as “black boxes,” making it difficult to understand their decision-making process.

Researchers are working to address these issues by developing more efficient architectures, improving explainability, and exploring methods like transfer learning and federated learning.

Conclusion

Neural networks have transformed the AI landscape, enabling breakthroughs in diverse domains. As research continues, these models are expected to become more efficient, interpretable, and accessible, driving innovation and solving some of the world’s most complex problems. By understanding the fundamentals of neural networks, we can better appreciate their potential and the opportunities they offer for the future.